What is a search engine? History of search engines

Source: Shangpin China

Type: website encyclopedia

Time: June 16, 2014

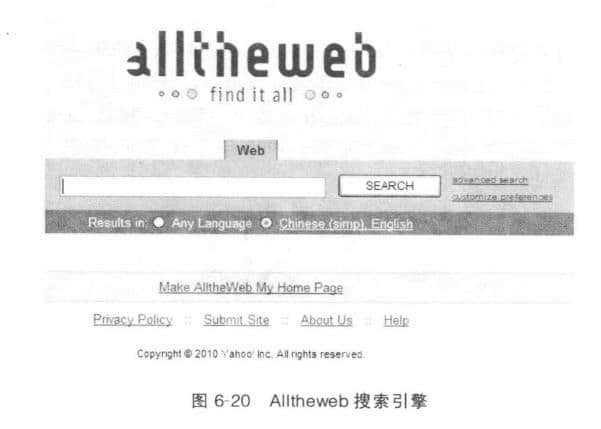

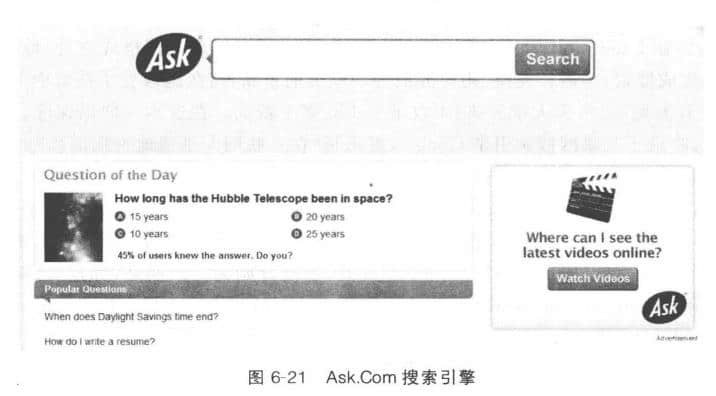

A search engine is a system that collects information from the Internet using specific strategies and computer programs, organizes and processes that information, and presents it to users as a retrieval service. Search engines have become one of the essential tools for using the Internet. In summary, a search engine works in three stages: crawling web pages, processing them, and serving queries.

Each independent search engine has its own web crawler (a "spider"), which continuously follows the hyperlinks in web pages to fetch further pages. The stored copy of a fetched page is called a web page snapshot. Because hyperlinks are so widely used on the Internet, most web pages can in theory be reached by starting from a limited set of pages.

After fetching a page, the search engine must do a great deal of preprocessing before it can serve queries. The most important steps are extracting keywords and building index files; others include removing duplicate pages, analyzing hyperlinks, and calculating the importance of pages.

When a user enters a keyword, the search engine finds the pages that match it in the index database. To help the user judge the results, each one shows a summary and other information from the page in addition to the page title and URL.

1. History of search engines

The ancestor of all search engines is Archie, created in 1990 by Alan Emtage, Peter Deutsch and Bill Heelan, then at McGill University in Canada. Although the World Wide Web was not yet in wide use, file transfer over the network was already frequent, and because large numbers of files were scattered across many separate FTP hosts, finding them was very inconvenient. Alan Emtage and his colleagues wanted a system that could find files by file name, and Archie was the result.
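The idea of finding files by name across scattered FTP hosts can be pictured as a lookup table from file name to the hosts that carry it. The sketch below is only an illustration of that idea, not Archie's actual implementation; the host names, file names, and the functions `build_filename_index` and `find_hosts` are all hypothetical.

```python
from collections import defaultdict


def build_filename_index(listings):
    """Map each file name to the sorted list of hosts that carry it.

    `listings` maps a host name to the file names found on that host.
    """
    index = defaultdict(set)
    for host, filenames in listings.items():
        for name in filenames:
            index[name].add(host)
    return {name: sorted(hosts) for name, hosts in index.items()}


def find_hosts(index, filename):
    """Exact-match lookup: hosts that carry `filename`, or an empty list."""
    return index.get(filename, [])


# Hypothetical listings, invented for illustration only.
LISTINGS = {
    "ftp.example.edu": ["readme.txt", "tools.tar"],
    "ftp.example.org": ["tools.tar", "notes.txt"],
}
FILENAME_INDEX = build_filename_index(LISTINGS)
```

Because the lookup is an exact match on the file name, a query for a name that no host lists simply returns an empty list; there is no notion of relevance or partial matching in this sketch.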
Archie is a searchable list of FTP file names. The user must enter an exact file name, and Archie then reports which FTP addresses offer that file for download. Archie was therefore the first program to automatically index the files on anonymous FTP sites across the Internet, but it was not a true search engine. Inspired by Archie's popularity, researchers at the University of Nevada developed Veronica, a search tool for Gopher, in 1993. Jughead is another Gopher search tool that appeared later.

Search engines generally consist of a spider, an index generator and a query processor. The "robot" program that retrieves information crawls across the network like a spider, which is why a search engine's robot is called a "spider". The world's first spider was the World Wide Web Wanderer, developed by Matthew Gray of the Massachusetts Institute of Technology to track the growth of the Internet. At first it only counted the number of servers on the Internet; later it was extended to capture URLs as well.

In July 1994, Michael Mauldin of Carnegie Mellon University in the United States integrated John Leavitt's spider program into his indexing program and created Lycos. In April of the same year, David Filo, a doctoral student at Stanford University in the United States, and Jerry Yang, a Chinese American, jointly founded Yahoo, a large directory index, and successfully popularized the concept of the search engine. Since then, search engines have entered a period of rapid development. Today there are hundreds of named search engines on the Internet, and the amount of information they index is unprecedented. Google, for example, stores 3 billion pages in its database.

2. Introduction to several search engines

Here are some common search engines.

(1) Google

The interface of the Google search engine is shown in Figure 6-18.
Google began as BackRub, a small project at Stanford University. In 1995, doctoral student Larry Page began studying search engine design, and on September 15, 1997 he registered the google.com domain name. At the end of 1997, with the participation of Sergey Brin, Scott Hassan and Alan Steremberg, BackRub began to offer a demo version of Google. In February 1999, Google completed its transition from Alpha to Beta. Google's innovations, including PageRank, dynamic summaries, web page snapshots, daily refresh, support for multiple document formats, integrated search for maps, stocks, dictionaries and people, multilingual support, and its user interface, permanently changed the definition of a search engine. The 2006 edition of Webster's Dictionary added more than 100 new words. That dictionary, long known for being conservative and serious, included "google" as a word meaning "to quickly find information on the Internet".

(2) Baidu

Baidu (www.baidu.com) is currently the world's largest Chinese search engine; its interface is shown in Figure 6-19. In January 2000, Li Yanhong, a former senior engineer at Infoseek, and his friend Xu Yong, a postdoctoral fellow at the University of California, Berkeley, founded Baidu in Zhongguancun, Beijing. In May 2000, Baidu began providing search technology services to portal websites such as Sohu and Sina. It later launched the baidu.com search engine and began offering search services independently.

(3) Alltheweb

Launched in May 1999, Alltheweb is an excellent full-text search engine. In addition to regular web pages, it can search news, pictures, video, audio and other content. Its goal is to be the largest and fastest search engine in the world. Its interface is shown in Figure 6-20.

(4) Ask.com

Ask.com is a search engine that works by asking questions: users enter a question and search for the answer they want.
Its interface is shown in Figure 6-21.

3. Classification of search engines

Search engines are usually divided into full-text search engines, directory indexes and meta search engines.

(1) Full-text search engine

A full-text search engine is a search engine in the true sense, represented by Google abroad and Baidu in China. These engines extract information (mainly page text) from websites across the Internet, build a database from it, retrieve the records that match the user's query, and return the results in a defined order.

Full-text search engines fall into two categories according to where their results come from. The first kind has its own retrieval program, the "spider" or "robot" program, builds its own web page database, and serves results directly from that database. Google and Baidu belong to this category. The second kind rents the database of another search engine and presents the results in its own format; Lycos is an example.

(2) Directory index

As the name implies, a directory index stores websites in directories organized by category. When looking for information, users can either search by keyword or browse the category directories level by level. A keyword search returns results much as a full-text search engine does, ordering websites by relevance, although more human judgment is involved. When browsing the hierarchy, the websites within a directory are ordered alphabetically by title, with some exceptions.

A directory index differs from a full-text search engine in several ways. First, a full-text search engine indexes websites automatically, while a directory index is built entirely by hand.
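The automatic side of this contrast, extracting page text, building an index, and returning matching pages in a defined order, can be sketched with a small inverted index. This is a minimal sketch under simplifying assumptions: the pages are hard-coded rather than crawled, ranking is a plain count of matching terms, and the names `tokenize`, `build_index`, `search` and the example URLs are hypothetical rather than taken from any real engine.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Build an inverted index: term -> {url: occurrences of term on that page}."""
    index = defaultdict(dict)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term][url] = index[term].get(url, 0) + 1
    return index


def search(index, query):
    """Return URLs containing every query term, highest total term count first."""
    terms = tokenize(query)
    if not terms:
        return []
    postings = [index.get(term, {}) for term in terms]
    candidates = set(postings[0])
    for posting in postings[1:]:
        candidates &= set(posting)
    scored = [(sum(p[url] for p in postings), url) for url in candidates]
    # Sort by score descending, then by URL so ties have a stable order.
    return [url for _, url in sorted(scored, key=lambda item: (-item[0], item[1]))]


# Hypothetical pages standing in for crawled content.
PAGES = {
    "http://example.com/a": "Search engines crawl web pages and build an index.",
    "http://example.com/b": "A directory lists web sites by category.",
    "http://example.com/c": "An index maps keywords to pages; the index is searched.",
}
PAGE_INDEX = build_index(PAGES)
```

With these pages, `search(PAGE_INDEX, "index")` ranks page `c` ahead of page `a` because the term appears there twice. A real engine would use far richer relevance signals than raw term counts.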
After a user submits a website, a directory editor visits the site in person and then decides whether to accept it, based on a set of criteria the directory defines for itself and even on the editor's subjective impression. Second, when a website is submitted to a search engine, it is generally included as long as it does not violate the relevant rules. A directory index places much higher demands on a website, and sometimes even repeated submissions fail. In addition, when submitting to a search engine, users generally do not need to think about how their website is classified, but when submitting to a directory index they must place it in the most appropriate category. Finally, a full-text search engine extracts the information about each website automatically from the site's own pages, so users have more control over it, whereas a directory index requires the website information to be filled in by hand and imposes various restrictions. Moreover, if the directory staff consider the category or website information submitted by a user inappropriate, they can change it at any time, without consulting the user in advance.

At present, search engines and directory indexes are converging. Some search engines that were originally pure full-text engines now also offer directory search; for example, Google uses the Open Directory to provide category-based queries. Established directory indexes such as Yahoo!, in turn, extend their search coverage through cooperation with Google and other search engines.

(3) Meta search engine

After receiving a user's query, a meta search engine searches multiple search engines at the same time and returns the results to the user. Well-known meta search engines include InfoSpace, Dogpile and Vivisimo. A typical Chinese meta search engine is the Star Search Engine.
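The fan-out step, sending one query to several engines at once and collecting what comes back, can be sketched as follows. This is an illustration under stated assumptions, not how any named meta search engine works: `engine_a` and `engine_b` are hypothetical stand-ins that return canned URLs instead of calling a real search service, and `meta_search` and `merge` are names invented for this sketch.

```python
from concurrent.futures import ThreadPoolExecutor


def engine_a(query):
    """Hypothetical engine: returns canned results instead of a network call."""
    return [f"http://a.example/{query}/1", "http://shared.example/page"]


def engine_b(query):
    """Hypothetical engine: returns canned results instead of a network call."""
    return ["http://shared.example/page", f"http://b.example/{query}/1"]


ENGINES = {"engine_a": engine_a, "engine_b": engine_b}


def meta_search(query, engines=ENGINES):
    """Query every engine concurrently and return results grouped by source."""
    with ThreadPoolExecutor(max_workers=len(engines)) as pool:
        futures = {name: pool.submit(fn, query) for name, fn in engines.items()}
        return {name: future.result() for name, future in futures.items()}


def merge(results_by_source):
    """Flatten per-source results into one list, dropping duplicate URLs."""
    seen, merged = set(), []
    for urls in results_by_source.values():
        for url in urls:
            if url not in seen:
                seen.add(url)
                merged.append(url)
    return merged
```

Calling `meta_search("cats")` returns one result list per engine, and `merge` combines them while keeping only the first occurrence of a URL that more than one engine returned.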
In terms of how results are arranged, some meta search engines list results directly by source, such as Dogpile, while others re-rank and combine them according to their own rules, such as Vivisimo.

Search engines are currently in a stage of rapid development, and all the major search engines are built on distributed computing. In short, a distributed system is one in which multiple servers work together to search massive amounts of information. Google, for example, runs on tens of thousands of servers, which gives it better retrieval performance and load tolerance. Load tolerance here means the ability of the servers to handle a large number of concurrent requests within a few seconds.

At present, the main room for improvement in search engine technology is search accuracy. Besides traditional web page ranking algorithms (the rules for ordering search results), such as PageRank and HillTop, accuracy is also closely tied to progress in natural language research.
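To make the idea of a link-based ranking algorithm concrete, the sketch below computes PageRank-style scores by repeated iteration over a tiny link graph. It follows the simplified textbook formulation and is not Google's production implementation; the damping factor of 0.85, the fixed iteration count, the handling of pages without outgoing links, and the example graph `LINKS` are all assumptions made for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Compute PageRank-style scores.

    `links` maps each page to the list of pages it links to.
    Returns a dict mapping each page to a score; the scores sum to 1.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page receives a small baseline share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its damped score among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score evenly.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical link graph: page "C" is linked to by every other page.
LINKS = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
```

In this graph, page `C` ends up with the highest score because every other page links to it, while page `D`, which nothing links to, keeps only the baseline share.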
